Authors: Leonardo Pepino, Pablo Riera, Luciana Ferrer

Abstract:

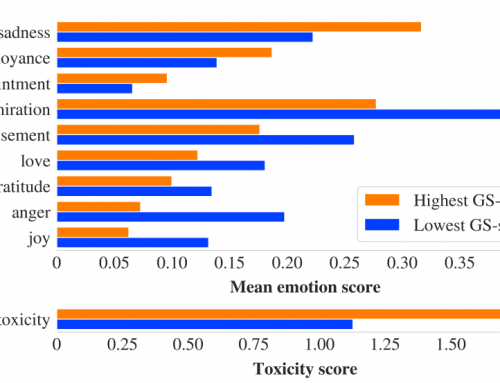

Emotion recognition datasets are relatively small, making the use of deep learning techniques challenging. In this work, we propose a transfer learning method for speech emotion recognition (SER) where features extracted from pre-trained wav2vec 2.0 models are used as input to shallow neural networks to recognize emotions from speech. We propose a way to combine the output of several layers from the pre-trained model, producing richer speech representations than the model’s output alone. We evaluate the proposed approaches on two standard emotion databases, IEMOCAP and RAVDESS, and compare different feature extraction techniques using two wav2vec 2.0 models: a generic one, and one finetuned for speech recognition. We also experiment with different shallow architectures for our speech emotion recognition model, and report baseline results using traditional features. Finally, we show that our best performing models have better average recall than previous approaches that use deep neural networks trained on spectrograms and waveforms or shallow neural networks trained on features extracted from wav2vec 1.0.
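
The abstract does not spell out how the layer outputs are combined. As an illustration only, the sketch below shows one common option: a weighted average over layers with softmax-normalized weights, followed by mean pooling over time. The function name `combine_layers`, the layer count (13), the feature dimension (768), and the random stand-in activations are all assumptions for this example, not details taken from the paper.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def combine_layers(layer_outputs, layer_logits):
    """Weighted average of per-layer features (hypothetical helper).

    layer_outputs: array of shape (num_layers, num_frames, dim)
    layer_logits:  array of shape (num_layers,); in a real model these
                   would be trainable parameters learned with the classifier.
    Returns an array of shape (num_frames, dim).
    """
    weights = softmax(layer_logits)  # non-negative, sums to 1
    # Contract the layer axis: sum_l weights[l] * layer_outputs[l]
    return np.tensordot(weights, layer_outputs, axes=1)

# Random stand-in for the hidden states of a pre-trained model
# (assumed: 13 layers, 50 frames, 768-dimensional features).
rng = np.random.default_rng(0)
layer_outputs = rng.standard_normal((13, 50, 768))

# Zero logits give uniform weights, i.e. a plain average over layers.
layer_logits = np.zeros(13)

combined = combine_layers(layer_outputs, layer_logits)

# Mean pooling over time gives one fixed-size vector per utterance,
# which a shallow classifier could take as input.
utterance_embedding = combined.mean(axis=0)
```

With learned logits, the downstream model can weight whichever layers carry the most useful information for emotion recognition instead of relying on the final layer alone.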

More information: https://www.isca-speech.org/archive/interspeech_2021/pepino21_interspeech.html